When working with coding agents, it's essential to give them the signals they need to determine whether they've done the job right. The typical signals come from inspecting the generated code, type-checking, linting, and running tests. What's missing, though, is access to the application itself. If we could give the agent the tools to inspect and interact with the application at runtime, it could check its work directly rather than infer it from the code.

There's been a lot of buzz lately about playwright-mcp, which does exactly that. I figured I'd try it out this weekend, but it turns out it doesn't play nicely with Electron, which the app I'm currently hacking on uses. This gave me an idea, though: what if we could leverage the Chrome DevTools Protocol (CDP) more directly?

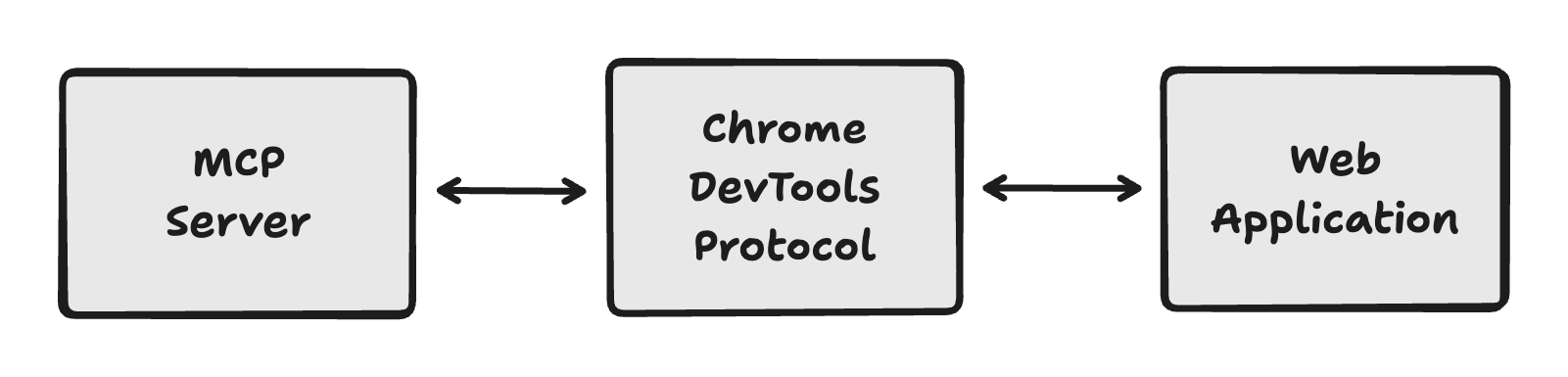

I did some experimentation, and it turns out that by creating a simple MCP server that hooks up directly to CDP, you can give any agent virtually full browser access through a single tool. In this post I'll walk through how that can be done. If you'd rather jump straight in and give it a try, head over to this repo and grab the code and instructions.

Overview of CDP

CDP is the protocol Chrome uses to expose the DevTools API over a WebSocket. By connecting to this WebSocket and sending messages, an external client can programmatically do the things DevTools can do, from inspecting your web page to executing code. For all the power that CDP provides, the protocol is remarkably simple and very LLM-friendly. Here's an example of a CDP message that retrieves the title of a page by running a snippet of JavaScript:

```json
{
  "id": 2,
  "method": "Runtime.evaluate",
  "params": {
    "expression": "document.title",
    "returnByValue": false
  }
}
```

And the response:

```json
{
  "id": 2,
  "result": {
    "type": "string",
    "value": "Welcome to yolo",
    "description": "Welcome to yolo"
  }
}
```

The input is a JSON formatted message that takes a method field and some params that the method expects. The available methods can all be found here. There are a lot of methods capable of doing many things, but this is essentially all you need to know to understand how the MCP tool will communicate with the browser.
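To make the message shape concrete, here is a minimal TypeScript sketch of encoding a command and decoding a reply. The names `CDPCommand`, `CDPReply`, `encodeCommand`, and `decodeReply` are hypothetical and exist only for illustration; the error shape is assumed to be a `code` plus a `message`.

```typescript
// Shape of an outgoing CDP command, mirroring the JSON example above.
interface CDPCommand {
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// Shape of a reply: the same id, plus either a result or an error.
interface CDPReply {
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

// Serialize a command into the text frame that goes over the WebSocket.
function encodeCommand(command: CDPCommand): string {
  return JSON.stringify(command);
}

// Parse an incoming text frame and make sure it is a reply to a command.
function decodeReply(raw: string): CDPReply {
  const parsed = JSON.parse(raw) as CDPReply;
  if (typeof parsed.id !== "number") {
    throw new Error("Not a command reply: missing numeric id");
  }
  return parsed;
}

const wire = encodeCommand({
  id: 2,
  method: "Runtime.evaluate",
  params: { expression: "document.title", returnByValue: false },
});
console.log(wire);
```

The string produced by `encodeCommand` is what would be written to the WebSocket, and every text frame that comes back with an `id` can be passed through `decodeReply`.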

Building the MCP server

Now let's write the code that will expose CDP to our coding agent. The full code can be found here.

First, we'll create a simple CDP client. It does two things:

- Connects to the browser and maintains a WebSocket connection.

- Adds a thin layer that abstracts away the ID tracking of messages.
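The ID tracking mentioned in the second bullet can be sketched roughly as follows. This is not the code from the repo: `CommandTracker`, `handleMessage`, and the injected `Send` function are hypothetical names, and the WebSocket is replaced by a plain callback so the correlation logic stands on its own.

```typescript
interface CDPResponse {
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

// Hypothetical stand-in for the WebSocket's send function.
type Send = (raw: string) => void;

class CommandTracker {
  private nextId = 1;
  private pending = new Map<number, (response: CDPResponse) => void>();
  private send: Send;

  constructor(send: Send) {
    this.send = send;
  }

  // Number of commands still waiting for a reply.
  get pendingCount(): number {
    return this.pending.size;
  }

  // Assign a fresh id, remember who is waiting for it, then send the command.
  sendCommand(method: string, params: Record<string, unknown> = {}): Promise<CDPResponse> {
    const id = this.nextId++;
    return new Promise((resolve) => {
      this.pending.set(id, resolve);
      this.send(JSON.stringify({ id, method, params }));
    });
  }

  // Feed every incoming WebSocket text frame through this method.
  handleMessage(raw: string): void {
    const message = JSON.parse(raw) as Partial<CDPResponse>;
    if (typeof message.id !== "number") return; // events carry no id
    const resolve = this.pending.get(message.id);
    if (!resolve) return; // reply to a command we are not tracking
    this.pending.delete(message.id);
    resolve(message as CDPResponse);
  }
}
```

A real client would also want timeouts and cleanup when the socket closes; those are left out to keep the sketch short.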

The important part is that it gives us a method that looks like this:

```typescript
async sendCommand(method: string, params?: any): Promise<CDPResponse> {
  // ...
}
```

Where CDPResponse is a simple type with the shape of a CDP message response:

```typescript
export interface CDPResponse {
  id: number;
  result?: any;
  error?: {
    code: number;
    message: string;
  };
}
```

Next we'll expose our sendCommand() method via an MCP server. I'll leave out some of the details here but this is straightforward using the official SDK:

```typescript
import {
  CallToolRequestSchema,
  ListToolsRequestSchema,
  type Tool,
} from "@modelcontextprotocol/sdk/types.js";

// Inside the server class: advertise the single CDP tool.
this.server.setRequestHandler(ListToolsRequestSchema, async () => {
  return {
    tools: [
      {
        name: "cdp_command",
        description:
          "Send a Chrome DevTools Protocol command to the connected page",
        inputSchema: {
          type: "object",
          properties: {
            method: {
              type: "string",
              description:
                'Any CDP command name (e.g., "Runtime.evaluate", "Page.navigate")',
            },
            params: {
              type: "string",
              description: "JSON string of parameters for the CDP command",
              default: "{}",
            },
          },
          required: ["method"],
        },
      },
    ] as Tool[],
  };
});

// Route incoming tool calls by name.
this.server.setRequestHandler(CallToolRequestSchema, async (request) => {
  // The tool name and its arguments arrive in the request params.
  const { name, arguments: args } = request.params;

  switch (name) {
    case "cdp_command":
      return await this.handleCdpCommand(args);
    default:
      throw new Error(`Unknown tool: ${name}`);
  }
});
```

As you can see, we're providing a single tool that essentially says "send any CDP command you want", giving the AI direct low-level access to the entire DevTools API.
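The handler above delegates to `handleCdpCommand`, which isn't shown. Here is a minimal sketch of what such a handler could look like, assuming it decodes the JSON-string `params`, forwards the command, and returns the reply as text. The standalone function signature, the `CommandSender` interface, and the `parseParams` helper are hypothetical; the repo's actual implementation may differ.

```typescript
interface CDPResponse {
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

// Hypothetical minimal view of the CDP client: only sendCommand() is needed.
interface CommandSender {
  sendCommand(method: string, params?: Record<string, unknown>): Promise<CDPResponse>;
}

// The tool schema declares params as a JSON string, so decode it to an object.
function parseParams(raw: unknown): Record<string, unknown> {
  if (raw === undefined || raw === null || raw === "") return {};
  if (typeof raw !== "string") throw new Error("params must be a JSON string");
  const parsed: unknown = JSON.parse(raw);
  if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
    throw new Error("params must decode to a JSON object");
  }
  return parsed as Record<string, unknown>;
}

async function handleCdpCommand(
  client: CommandSender,
  args: { method?: unknown; params?: unknown },
) {
  const method = args.method;
  if (typeof method !== "string" || method.length === 0) {
    throw new Error("method is required");
  }
  const response = await client.sendCommand(method, parseParams(args.params));
  // MCP tool results carry a list of content items; plain text keeps the
  // raw CDP reply readable for the model.
  return {
    content: [{ type: "text" as const, text: JSON.stringify(response, null, 2) }],
    isError: response.error !== undefined,
  };
}
```

Returning the full CDP reply as text, errors included, is what lets the agent read a failure and adjust its next command.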

Hooking it up to Claude Code

You can use this MCP server with any MCP client, such as Cursor or Windsurf. In this example we'll set it up in Claude Code. Here are the steps:

1. Clone the repo to a local folder e.g. ~/devtools-mcp.

2. Install dependencies: cd ~/devtools-mcp && npm install.

3. In your working project, add the MCP server to Claude Code: claude mcp add cdp-server -- npx tsx ~/devtools-mcp/index.ts.

When Claude Code starts, it will now automatically launch and connect to the MCP server.

Taking it for a spin

Now that Claude Code has DevTools access, it should in theory be able to figure out which command or sequence of commands to perform to achieve certain goals. Let's try it out. Below are trimmed logs from giving Claude Code a couple of different prompts in the repo for this blog site.

Find interactive elements

> which elements are interactible?

⏺ I'll use CDP to identify all interactive elements on the page.

⏺ cdp-server:cdp_command (MCP)(method: "Runtime.evaluate", params: "{

// Code omitted for brevity

}")

⏺ The page has the following interactive elements:

1. Navigation links:

- "autoconfig" logo/link at the top

- Email icon link (mailto:stefan@autoconfig.io) in top right

2. Main article link:

- The article title "Give your coding agent browser superpowers with DevTools access via MCP"

3. Anchor links (for sections within the article):

- Overview of CDP

- Building the MCP server

- Hooking it up to Claude Code

...

Taking screenshots

> what do you see on the page

⏺ I'll use the CDP command to take a screenshot of the current page.

⏺ cdp-server:cdp_command (MCP)(method: "Page.captureScreenshot", params: "{}")…

⎿ {

"id": 1,

"result": {

"data": "[binary data saved to: cdp-output/data_1748889874144_d9259498.png]"

}

}

⏺ Read(cdp-output/data_1748889874144_d9259498.png)…

⎿ Read image (234.6KB)

⏺ I can see a blog post on autoconfig's website titled "Give your coding agent browser superpowers with DevTools access via MCP" dated June 2, 2025. The article discusses...

Check website performance

> analyze the website for rendering performance

⏺ I'll analyze the website's rendering performance using Chrome DevTools Protocol to gather performance metrics and identify potential issues.

⏺ Update Todos

⎿ ☐ Enable performance monitoring and collect metrics

☐ Analyze render blocking resources

☐ Measure Core Web Vitals

☐ Check layout and paint performance

☐ Analyze network performance and resource loading

⏺ cdp-server:cdp_command (MCP)(method: "Runtime.evaluate", params: "{\"expression\": \"JSON.stringify(performance.getEntriesByType('navigation')[0])\"}")…

⎿ {

"id": 6,

"result": {

"result": {

… +5 lines (ctrl+r to expand)

⏺ Update Todos

⎿ ☒ Enable performance monitoring and collect metrics

☐ Analyze render blocking resources

... (omitted lots of calls here)

⏺ Performance Analysis Summary

✅ Core Web Vitals (All Good)

- FCP: 72ms (Good) - First Contentful Paint

- LCP: 0ms (Good) - No large content detected

- CLS: 0 (Good) - No layout shifts

- TTFB: 40.2ms (Good) - Time to First Byte

📊 Key Metrics

- Total Load Time: 236.8ms

- DOM Interactive: 62.9ms

Closing the loop

It turns out that simply giving Claude CDP access works really well. Perhaps this shouldn't come as a surprise considering how LLM-friendly the protocol is:

- All the commands are specified using pure text.

- The responses are also pure text which allows the agent to iterate on the command or take a different approach if it fails.

- The CDP specification has been around for a long time, and models have a strong understanding of the available commands and how to string them together to achieve a certain goal.

- The ability to execute arbitrary code allows the LLM to be creative with its solutions.

With that said, keep in mind that this is a very bare-bones solution in its current state. It should be considered a starting point for further experimentation rather than something that is ready for prime time. One limitation is that responses can easily blow up and exceed the context limit (e.g., full DOM retrievals). The agent can detect this, though, and in my experience it often finds an alternative approach for the given task, such as constraining its query.
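One possible guard against oversized responses, which is not part of the server described here, is to cap the text before handing it to the agent. The sketch below assumes a simple character budget; `truncateForContext` and the 20,000-character default are hypothetical choices for illustration.

```typescript
// Cap a tool response at maxChars and tell the agent how much was dropped,
// so it knows to retry with a narrower query.
function truncateForContext(text: string, maxChars = 20_000): string {
  if (text.length <= maxChars) return text;
  const omitted = text.length - maxChars;
  return `${text.slice(0, maxChars)}\n[truncated ${omitted} characters; narrow the query to see more]`;
}
```

The trailing note matters more than the cut itself, since it gives the agent an explicit signal to constrain its next command.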

Also note that certain CDP methods (e.g., taking screenshots) return base64-encoded binary data. This can be handled by detecting those responses and writing the data to disk. The data field is then swapped out for a path to the file before the response is handed over to the LLM, which allows the agent to use its native file retrieval tool.
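The swap described above could be sketched like this. The detection heuristic (a long, well-formed base64 string in the `data` field), the helper names `looksLikeBase64`, `saveToDisk`, and `offloadBinaryData`, and the fixed `.png` extension are all assumptions for illustration; the file name and placeholder format simply mirror the screenshot log shown earlier.

```typescript
import { randomBytes } from "node:crypto";
import { mkdirSync, writeFileSync } from "node:fs";
import { join } from "node:path";

const BASE64_PATTERN = /^[A-Za-z0-9+/]+={0,2}$/;

// Heuristic (an assumption): long strings made only of base64 characters.
function looksLikeBase64(value: unknown, minLength = 64): value is string {
  return (
    typeof value === "string" &&
    value.length >= minLength &&
    value.length % 4 === 0 &&
    BASE64_PATTERN.test(value)
  );
}

// Write the bytes under outputDir and return the path of the new file.
// The .png extension assumes a screenshot in the default format.
function saveToDisk(bytes: Buffer, outputDir = "cdp-output"): string {
  mkdirSync(outputDir, { recursive: true });
  const fileName = `data_${Date.now()}_${randomBytes(4).toString("hex")}.png`;
  const filePath = join(outputDir, fileName);
  writeFileSync(filePath, bytes);
  return filePath;
}

// Replace a base64 `data` field with a pointer to the file on disk.
function offloadBinaryData(
  result: Record<string, unknown>,
  save: (bytes: Buffer) => string = saveToDisk,
): Record<string, unknown> {
  const data = result.data;
  if (!looksLikeBase64(data)) return result;
  const filePath = save(Buffer.from(data, "base64"));
  return { ...result, data: `[binary data saved to: ${filePath}]` };
}
```

Passing the `save` function as a parameter keeps the detection logic separate from the file system, which also makes it easy to try out without writing anything to disk.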

There are many other special cases and optimizations that could improve this solution. With time, however, the effectiveness of this approach should scale as the power and context limits of models increase.